- 03 Jan 2025


Top Tips


Before we go into the samples below, here are a few top tips we think you will find useful.

Include the time

When you create a query for the BAM feature, you need to include one of the following two fields:

- timestamp (App Insights)

- TimeGenerated (Log Analytics)

These are default fields on these data sources, and Turbo360 uses them to apply time filters when it queries for records.

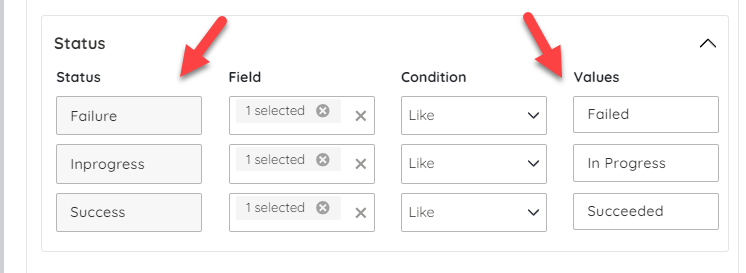
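The requirement makes sense once you consider that any time-window filter needs a column to compare against. The Python below is a conceptual sketch of that idea, not Turbo360's implementation. The `TIME_COLUMN` mapping, the row dictionaries and the half-open window are assumptions made for illustration. In KQL the App Insights column is spelled `timestamp` and the Log Analytics column is `TimeGenerated`, and column names are case-sensitive.

```python
from datetime import datetime, timezone

# Hypothetical: which time column each data source exposes to the query.
TIME_COLUMN = {
    "app_insights": "timestamp",
    "log_analytics": "TimeGenerated",
}


def filter_by_time(rows, source, start, end):
    """Keep rows whose time column falls in the window [start, end).

    In this sketch a row without the expected time column raises KeyError,
    mirroring why the BAM query has to return one of these fields.
    """
    column = TIME_COLUMN[source]
    return [row for row in rows if start <= row[column] < end]


# Usage: two Log Analytics rows, only one of which is inside the window.
utc = timezone.utc
rows = [
    {"TimeGenerated": datetime(2025, 1, 3, 9, 0, tzinfo=utc), "Status": "Succeeded"},
    {"TimeGenerated": datetime(2025, 1, 3, 12, 0, tzinfo=utc), "Status": "Failed"},
]
in_window = filter_by_time(
    rows,
    "log_analytics",
    datetime(2025, 1, 3, 8, 0, tzinfo=utc),
    datetime(2025, 1, 3, 10, 0, tzinfo=utc),
)
```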

Mapping status fields for the transaction

You will often want to return a status that you can map in Turbo360, so that it shows the user a coloured status indicator. This mapping is configured at either shape or transaction level, as shown in the image below.

One thing I like to do is set up a field in my KQL query that provides this mapping with a case statement. This keeps the logic in the query, so I can decide which fields indicate whether the process has succeeded, failed or is still in progress.

Below is an example of how I can do this in KQL:

```kql
| extend OverallStatus = case(
    EndSuccess == 'True', 'Succeeded',
    EndSuccess == 'False', 'Failed',
    'In Progress')
```

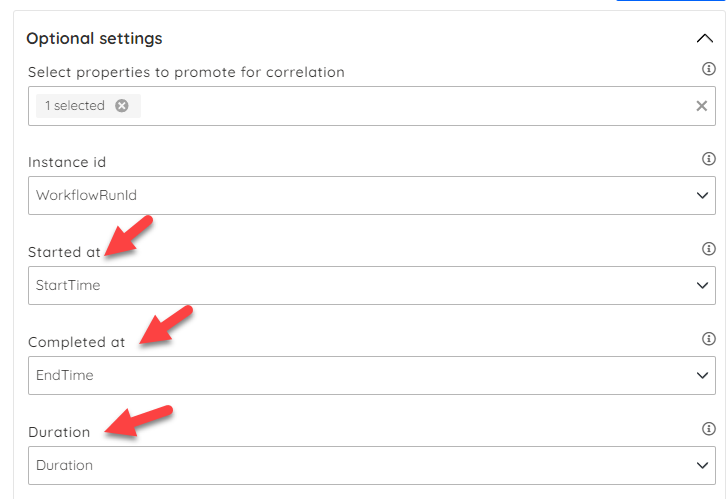
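The KQL case() function evaluates its conditions in order and returns the final argument when none of them match, so the mapping behaves like an if/elif chain. The Python function below is a sketch that mirrors that KQL. It can be handy as a table of expected values when you test the query. Like the KQL, it assumes EndSuccess holds the strings 'True' or 'False'.

```python
def overall_status(end_success):
    """Mirror of the KQL case() expression for OverallStatus."""
    if end_success == "True":
        return "Succeeded"
    if end_success == "False":
        return "Failed"
    # Anything else, such as an empty value when no end event was matched,
    # falls through to the default branch.
    return "In Progress"


statuses = [overall_status(value) for value in ["True", "False", ""]]
```

The comparison is exact, so 'true' in lower case would also fall through to 'In Progress'. In KQL, == is likewise case-sensitive for strings; use =~ if your logged values vary in case.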

Start, End and Duration

In Turbo360 you can map columns to the start, end and duration fields. These are optional default fields that the user interface displays if you provide them, and they can be used at both transaction and stage level.

If a stage is very short-running, you may choose to skip these fields and just focus on matching a log event to the shape and its status. At transaction level, however, you will very likely want to use them.

At transaction level, I normally use the timestamps on the start and end events to work out the overall transaction start and end times.

I then use the extend operator to add a new field, as shown below, that subtracts the start time from the end time. This gives a calculated duration that I can return to Turbo360.

```kql
| extend Duration = EndTime - StartTime
```
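Here is the same calculation sketched in Python, assuming each event is a dictionary with a timestamp key (the event shape is hypothetical). Subtracting two datetimes gives a timedelta, much as EndTime - StartTime gives a timespan in KQL. While the end event is missing, the sketch returns no end time or duration, which lines up with an 'In Progress' transaction.

```python
from datetime import datetime, timedelta, timezone


def transaction_times(start_event, end_event=None):
    """Return (StartTime, EndTime, Duration) for a transaction.

    start_event and end_event are hypothetical dicts with a "timestamp" key.
    If the end event has not been logged yet, EndTime and Duration are None.
    """
    start_time = start_event["timestamp"]
    if end_event is None:
        return start_time, None, None
    end_time = end_event["timestamp"]
    # Same idea as: | extend Duration = EndTime - StartTime
    return start_time, end_time, end_time - start_time


start = {"timestamp": datetime(2025, 1, 3, 9, 0, 0, tzinfo=timezone.utc)}
end = {"timestamp": datetime(2025, 1, 3, 9, 2, 30, tzinfo=timezone.utc)}
_, _, duration = transaction_times(start, end)
```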

Links to Azure

You can add a field to your query that builds an Azure Portal URL, which opens the resource for the matching run ID. Below are some examples.

Logic App Consumption

In this example I take the base URL for the Logic App run history and then dynamically append the run ID to it in the query output.

```kql
// This is the url from Azure Portal for this logic app for a run history but with the run id taken off as we will append it later dynamically
let inputLogicAppRunPortalUrl = "https://portal.azure.com/#view/Microsoft_Azure_EMA/DesignerEditorConsumption.ReactView/id/%2Fsubscriptions%2F08a281b8-3b07-4219-a517-b11230e9b34f%2FresourceGroups%2FEAI_App_EmployeeBenefitsFiles%2Fproviders%2FMicrosoft.Logic%2Fworkflows%2FEmployeeBenefits-To-BenefitsManagement-Partner/location/northeurope/showGoBackButton~/true/isReadOnly~/true/isMonitoringView~/true/runId/%2Fsubscriptions%2F08a281b8-3b07-4219-a517-b11230e9b34f%2FresourceGroups%2FEAI_App_EmployeeBenefitsFiles%2Fproviders%2FMicrosoft.Logic%2Fworkflows%2FEmployeeBenefits-To-BenefitsManagement-Partner%2Fruns%2F";
AzureDiagnostics
| where ResourceProvider == "MICROSOFT.LOGIC"
| where ResourceGroup == "EAI_APP_EMPLOYEEBENEFITSFILES"
| where resource_workflowName_s == "EmployeeBenefits-To-BenefitsManagement-Partner"
| where ResourceType == "WORKFLOWS/RUNS/ACTIONS"
| where OperationName == "Microsoft.Logic/workflows/workflowActionCompleted"
| where Resource == "HTTP_-_GET_EMPLOYEE_BENEFITS_DATASET"
| extend FileName = trackedProperties_fileName_s
| extend WorkFlowName = resource_workflowName_s
| extend WorkFlowRunID = resource_runId_s
// Here I concat the url to show the portal url using the earlier parameter
| extend PortalUrl = strcat(inputLogicAppRunPortalUrl, resource_runId_s)
```
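If you would rather not copy the base URL from the portal for every workflow, the pattern in inputLogicAppRunPortalUrl can be rebuilt from the workflow's resource ID. The Python below is a sketch of that idea. The URL shape is inferred from the example above rather than from a documented portal contract, so check the output against a URL copied from your own portal. The function name and the run ID in the usage example are hypothetical.

```python
from urllib.parse import quote


def logic_app_run_url(subscription_id, resource_group, workflow, location, run_id):
    """Build a Logic App (Consumption) run URL in the same shape as
    inputLogicAppRunPortalUrl, with the run ID appended."""
    workflow_id = (
        f"/subscriptions/{subscription_id}/resourceGroups/{resource_group}"
        f"/providers/Microsoft.Logic/workflows/{workflow}"
    )
    # safe="" makes quote() encode "/" as %2F, as in the portal URL.
    encoded_id = quote(workflow_id, safe="")
    encoded_runs = quote(f"{workflow_id}/runs/", safe="")
    return (
        "https://portal.azure.com/#view/Microsoft_Azure_EMA/"
        "DesignerEditorConsumption.ReactView"
        f"/id/{encoded_id}/location/{location}"
        "/showGoBackButton~/true/isReadOnly~/true/isMonitoringView~/true"
        f"/runId/{encoded_runs}{quote(run_id, safe='')}"
    )


logic_app_example = logic_app_run_url(
    "08a281b8-3b07-4219-a517-b11230e9b34f",
    "EAI_App_EmployeeBenefitsFiles",
    "EmployeeBenefits-To-BenefitsManagement-Partner",
    "northeurope",
    "08584000000000000000000000CU00",  # hypothetical run ID
)
```

You could generate the base URL this way once per workflow and paste it into the let statement, keeping the KQL itself unchanged.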

Data Factory

In the example below I took the URL from a run of my Data Factory and used the KQL strcat() function to inject the run ID dynamically from the query.

You can see this in use in the Data Factory sample.

```kql
| extend PortalUrl = strcat(
    "https://adf.azure.com/en/monitoring/pipelineruns/", runId_g,
    "?factory=%2Fsubscriptions%2F", inputSubscriptionId,
    "%2FresourceGroups%2F", inputResourceGroupName,
    "%2Fproviders%2FMicrosoft.DataFactory%2Ffactories%2F", inputDataFactory)
```
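The factory parameter in that URL is simply the factory's resource ID, percent-encoded. The Python sketch below builds the same URL with urllib.parse.urlencode instead of hand-written %2F separators. Unlike plain strcat(), it would also encode any unusual characters in the names. The function name and all example values are hypothetical.

```python
from urllib.parse import urlencode


def adf_pipeline_run_url(run_id, subscription_id, resource_group, data_factory):
    """Build the ADF monitoring URL produced by the strcat() above."""
    factory_id = (
        f"/subscriptions/{subscription_id}/resourceGroups/{resource_group}"
        f"/providers/Microsoft.DataFactory/factories/{data_factory}"
    )
    # urlencode() percent-encodes "/" in the value as %2F, matching the
    # hard-coded %2F separators in the KQL version.
    query = urlencode({"factory": factory_id})
    return f"https://adf.azure.com/en/monitoring/pipelineruns/{run_id}?{query}"


adf_example = adf_pipeline_run_url(
    "11111111-2222-3333-4444-555555555555",  # hypothetical run ID
    "00000000-0000-0000-0000-000000000000",  # hypothetical subscription ID
    "my-rg",
    "my-adf",
)
```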

API Management

If you want a click-through property on your BAM shape, you can use the query below. It adds a link to the shape details that opens App Insights in the Azure Portal and shows the call tree from the request down through its dependencies.

In the parent BAM query against App Insights, promote the "itemId" field for correlation. The child shape query can then look up the request log row for that item and use the KQL below to build a clickable link. The link opens the right item in the Azure Portal. In the query, replace the parameter values with your own subscription ID, resource group and App Insights name.

```kql
// {itemId} is replaced with the itemId promoted from the parent BAM query
let input_item_id = {itemId};
// Replace these three values with your own resource group, subscription ID and App Insights name
let resourceGroup = "Platform";
let subscription = "08a281b8-3b07-4219-a517-b11230e9b34f";
let appInsightsName = "kv-eai-apim-appinsights";
// Portal URL for the App Insights details blade; the [placeholders] are filled in below
let portalUrlTemplate = "https://portal.azure.com/#blade/AppInsightsExtension/DetailsV2Blade/DataModel/%7B%22eventId%22:%22[RequestID]%22,%22timestamp%22:%22[startTime]%22%7D/ComponentId/%7B%22Name%22:%22[appInsightsName]%22,%22ResourceGroup%22:%22[resourceGroup]%22,%22SubscriptionId%22:%22[subscription]%22%7D";
let url_replace_subscription = replace_string(portalUrlTemplate, "[subscription]", subscription);
let url_replace_resource_group = replace_string(url_replace_subscription, "[resourceGroup]", resourceGroup);
let url_replace_app_insights_name = replace_string(url_replace_resource_group, "[appInsightsName]", appInsightsName);
requests
| where itemId == input_item_id
// Fill in the request ID and a start time one hour before the request timestamp
| extend azure_portal_url = replace_string(replace_string(url_replace_app_insights_name, "[RequestID]", itemId), "[startTime]", tostring(datetime_add('hour', -1, timestamp)))
```
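For comparison, here is a Python sketch of the same placeholder substitution. You might use it to build or check these links outside Kusto. The function name and example values are hypothetical. The timestamp string format (ISO 8601 with a trailing Z) is an assumption about what the portal accepts, so compare the result with a link copied from your own portal.

```python
from datetime import datetime, timedelta, timezone

# Same template as portalUrlTemplate in the KQL above.
PORTAL_URL_TEMPLATE = (
    "https://portal.azure.com/#blade/AppInsightsExtension/DetailsV2Blade/DataModel/"
    "%7B%22eventId%22:%22[RequestID]%22,%22timestamp%22:%22[startTime]%22%7D/"
    "ComponentId/%7B%22Name%22:%22[appInsightsName]%22,"
    "%22ResourceGroup%22:%22[resourceGroup]%22,"
    "%22SubscriptionId%22:%22[subscription]%22%7D"
)


def request_details_url(item_id, timestamp, app_insights_name, resource_group, subscription):
    """Fill the placeholders the same way the chained replace_string() calls do."""
    # Like the KQL, pass a start time one hour before the request timestamp.
    start_time = (timestamp - timedelta(hours=1)).strftime("%Y-%m-%dT%H:%M:%SZ")
    replacements = {
        "[subscription]": subscription,
        "[resourceGroup]": resource_group,
        "[appInsightsName]": app_insights_name,
        "[RequestID]": item_id,
        "[startTime]": start_time,
    }
    url = PORTAL_URL_TEMPLATE
    for placeholder, value in replacements.items():
        url = url.replace(placeholder, value)
    return url


details_example = request_details_url(
    "abc-123",  # hypothetical itemId
    datetime(2025, 1, 3, 10, 0, tzinfo=timezone.utc),
    "kv-eai-apim-appinsights",
    "Platform",
    "08a281b8-3b07-4219-a517-b11230e9b34f",
)
```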

Sampling

Remember that some of the logging approaches you may be using may not guarantee delivery. For example:

- App Insights may have sampling turned on

- Log Analytics and App Insights may not guarantee delivery under load

If you need guaranteed delivery, consider the Turbo360 BAM API and its push model. If you are happy to reuse the logging you already have in place but want to get more out of it, use the pull model described here.